AI-Generated Fake War Images Passed Off as Real

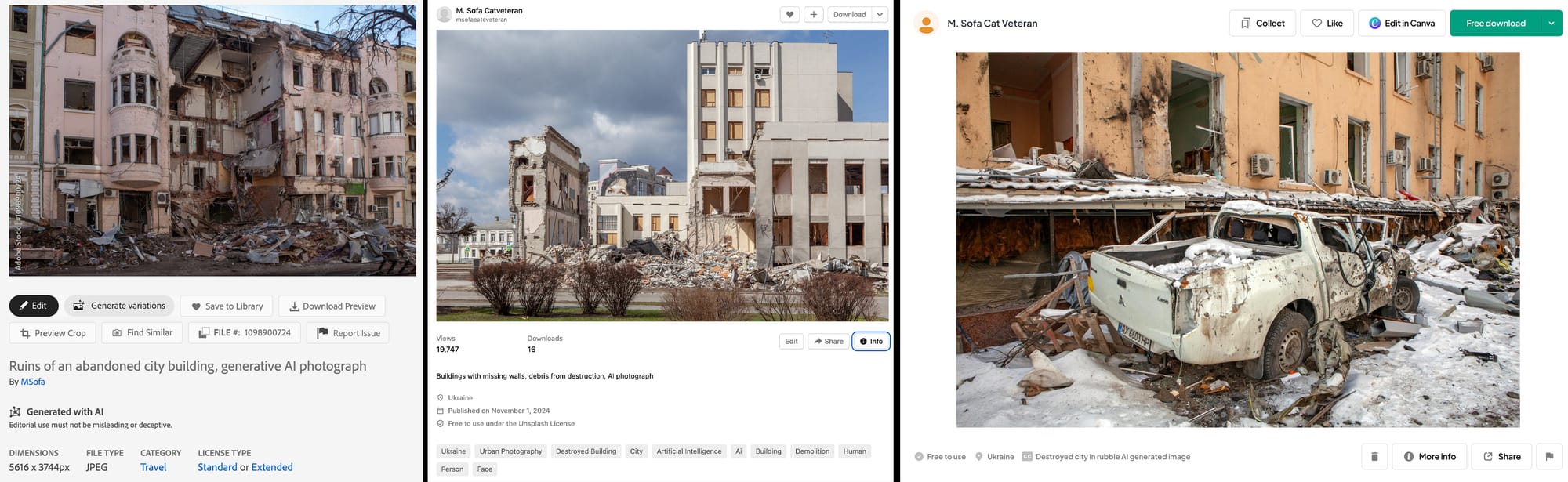

Images depicting war-torn Ukraine are being generated by AI services, sold on stock photo websites and used in media coverage of the conflict.

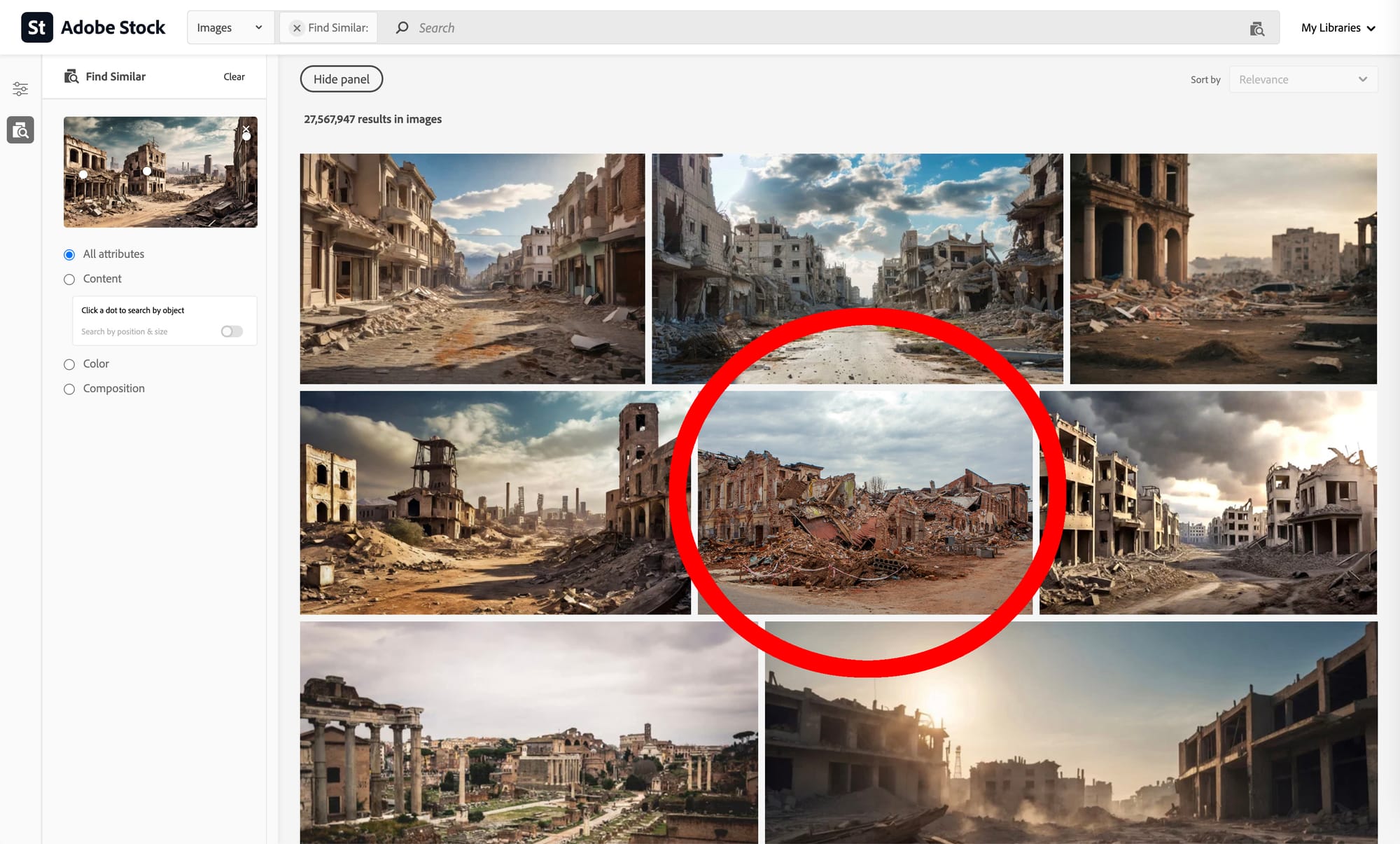

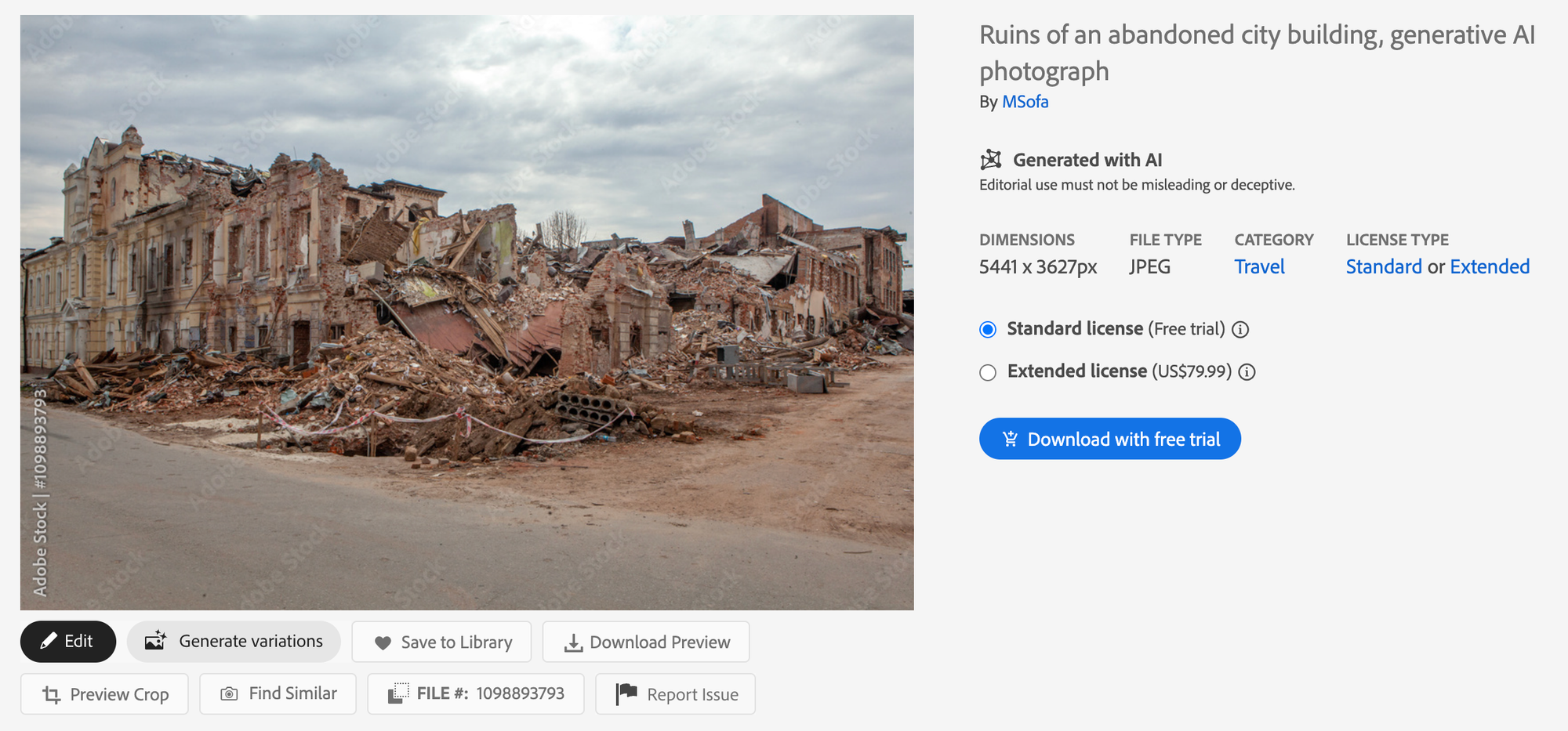

For the past couple of months, I have been working on an article about recent developments in Russia’s war in Ukraine. To illustrate the piece, I searched stock image websites for photography of the conflict. While scrolling through hundreds of licensable photographs of destroyed buildings, tanks with flags and portraits of refugees and survivors, I came across an image that alarmed me in an entirely different way.

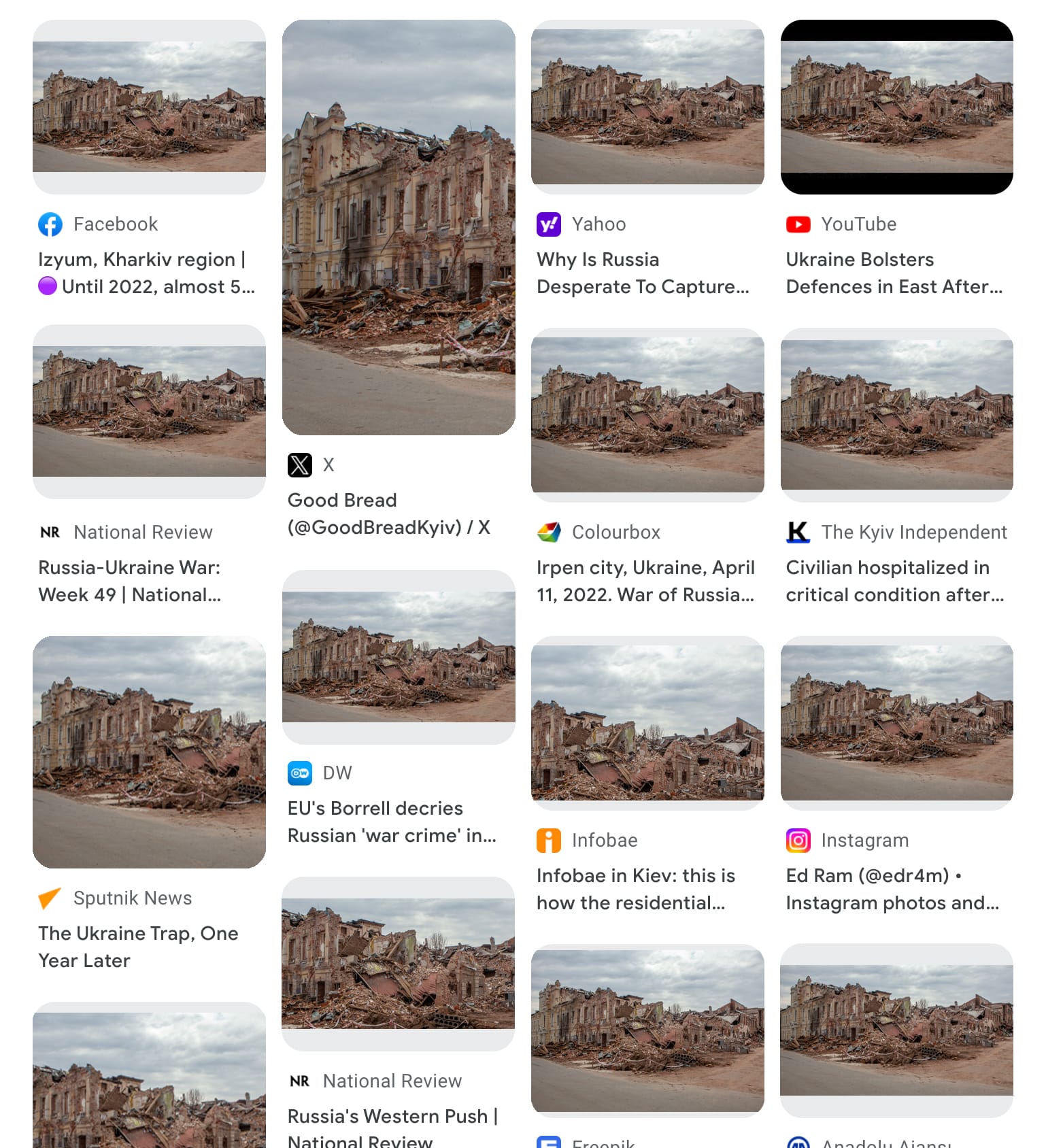

The image showed what looked like an apartment block reduced to rubble, with debris strewn around the hole left by shelling. Then I noticed that it was tagged as “AI Generated.” With this knowledge, I looked closer and began to spot anomalies consistent with AI-generated imagery: the lighting was too perfect, resembling stage lights and outdoor daylight at the same time, and the geometry of the debris didn’t quite make sense. These are common glitches of AI systems. The image also appeared to have been downloaded a number of times. I then used Google image search and found that it had been used in a number of blogs and news articles without any mention that it was AI-generated.

In the past year, AI-generated images have rapidly reached a lifelike quality and have begun to infiltrate every area of media and our visual environment. The apparent truthfulness of photography has been thrown into question by an influx of synthetic imagery that is indistinguishable from authentic documentation of events. While these formal questions of aesthetics and meaning make for interesting debate in the art world, they become far more difficult and dangerous in war photography documenting human rights abuses. Last fall, Adobe drew controversy for selling AI-generated images depicting Israel’s destruction of Gaza. I was surprised to see the same kind of ‘fake war photo’ circulating around the conflict in Ukraine.

Since Russian President Vladimir Putin’s invasion of Crimea in 2014, he has used disinformation tactics to weaken the resolve of occupied populations and blunt international pressure. Photorealistic AI images have become ammunition in the information war against accountability. For this reason, I think it is paramount to stay vigilant against AI images that depict war or are passed off as evidence of the very real destruction happening around the world.

As AI systems get better at generating realistic images, this challenge will only grow harder. And while the promoters of new consumer-ready AI services such as OpenAI’s ChatGPT, Midjourney and RunwayML use the rhetoric of inclusiveness and egalitarian access to technology, the distrust these images create inevitably serves the powerful perpetrators of atrocities. It is essential to expose and debunk the use of AI images in documentary contexts; otherwise, there is a real existential risk of undermining our sense of reality, the gravitas of war images and a democratic free press.